Summary

The proliferation of AI is not in question, but successful implementation remains an area of speculation and assumptions. Why do so many companies that run AI pilots never end up scaling their implementations in production? The answer might lie in their approach to AI maintainability.

AI platforms and solutions no longer feature in the ‘emerging technology’ category. From the experimental phase to the mainstream, intelligent solutions are now a mainstay in enterprise growth and digital transformation plans. AI is being used to drive better insights, automate technical and business workflows, and create an ecosystem of continuous learning. Across sectors, digital transformation and the adoption of AI-infused automation solutions have driven the proliferation of machine learning (ML) and deep learning (DL) techniques along with the latest iterations of Natural Language Understanding (NLU), Natural Language Processing (NLP), and Natural Language Generation (NLG). With developments such as computer vision algorithms for image processing, these technologies enable better decision-making, greater efficiency, and a substantial reduction in manual effort. The result is a more innovative and leaner enterprise capable of balancing stability, scalability, and innovation.

Several enterprise AI products in the market offer or claim to offer end-to-end AI platform solutions. These offerings typically allow businesses to leverage their AI capabilities while minimizing the need for technical expertise, allowing even developers with basic application development skills to build tools and solutions. The platforms incorporate a hardware and software architecture for a framework that supports AI application software. The software then uses various AI components like those mentioned above to derive and deploy cognitive solutions capable of mimicking the human mind and actions. Some AI platforms are generic and built to integrate with many AI technologies, while others solve particular business issues using a specific AI modality. This range of choice, ease of use, and incrementally valuable output make AI platforms an essential tool for enterprise growth.

The proliferation of AI, however, is not without its challenges. Much has been written about AI pilots that fail or ideas that never move to production, but this article is more concerned with sustaining and scaling the success of those that do.

Maintaining Balance – Challenges of Maintenance

For AI models to be successfully utilized, they must offer consistent and accurate output throughout their life cycle. The issue doesn’t always lie with the production iteration, but with how it is maintained. AI solutions deployed without management and maintenance cannot meet expectations.ⅱ

Let’s look at big data management in AI systems as an example. It is relatively simple to create data pipelines or add big data to the Hadoop ecosystem or any other big data storage via an AI platform. Enterprises can also try prebuilt advanced ML algorithms on their data, validate the predicted outcomes, and then move the validated AI models to production. They can repeat this for every identified best-fit AI use case, testing models and moving them to production for business consumption. Over time, this kind of iterative approach creates a scenario where multiple AI models are working simultaneously. It is here that the complexity starts to set in. With intelligent solutions gathering information at every user touchpoint, enterprises are facing a data explosion. Companies don’t just need to collect data; they need to make sense of it and turn it into valuable insights. Moving all the data to a central location and harnessing it for smarter business strategies is the top priority for most enterprises today. The job is easier said than done, and it is not surprising that less than one-fourth of senior C-level executives report progress in driving their organizations to a data-driven approach.ⅰ

The AI platform directly handles running the automations that AI models need. As a model keeps running, real-time data changes and the model's performance starts to deteriorate. When this happens, the business raises concerns about accuracy drops and prediction errors, questioning whether the prediction system can handle requests. Implementation teams must have a thorough understanding of data volatility, revalidate their initial assumptions and preprocessing steps, and feed in more training samples or retrain the model. Changes in data can also create biases, unacceptable outcomes, or inexplicable decisions that require the reconfiguration of parameters, retraining, and sometimes even code changes before models are redeployed to production. Given the number of times this can happen, how can enterprises keep track of the changes made to AI models? This is just one example. Let’s look at a few other issues before discussing a solution framework.

- The lack of operational capability to create and deploy an AI model
- Tackling post-production AI model output inaccuracy in some business scenarios
- The absence of traceability in AI model versions once moved to production
- The absence of end-to-end data governance and compliance and absence of governance strategy and policy
- The lack of interpretability and explainability
- The inability to manage structural bias and unintentional bias in model outcomes

The Maintenance Trifecta – Maintenance Solutions Designed for Long-term Value

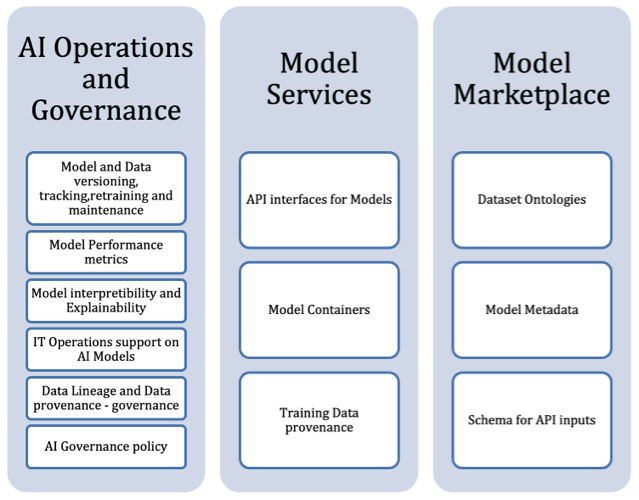

Each of these issues can be tackled with a robust maintenance framework. Consider organizing your thinking under three areas of impact designed for better enterprise AI implementations:

- AI Model Operations and Governance

AI Model Operations is similar to existing IT Operations frameworks in that it is the management of the productized AI application lifecycle by operations teams. It differs from operations for other applications in a few ways, however. Because ML solutions are not just code-based tools but a combination of the code, the data used in training, and the model, all three elements must be managed together. DevOps, data engineering, and the ML/DL model together form the scope of AI Model Operations, alongside monitoring AI automation jobs and infrastructure monitoring tasks. Some of the additional aspects of AI Model Operations include:

- Model and Data Versioning, Tracking, Retraining, and Maintenance

AI models and their associated data need to be created with the right versions, numbered, tested, built, deployed to production, tracked, and monitored for production support and ease of maintenance. The training data used and the updated model should be easily identifiable, and this necessitates a defined version policy that covers all the ML artifacts.
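One way to make the training data and the updated model "easily identifiable" is to derive a single version ID from all three artifacts at once. The sketch below is only an illustration under stated assumptions: the `ModelVersion` record, the `register` helper, and the 12-character digest length are hypothetical choices, not part of any particular platform.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def fingerprint(content: bytes) -> str:
    """Return a short SHA-256 digest that identifies an artifact's content."""
    return hashlib.sha256(content).hexdigest()[:12]


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical version record bundling code, training data, and model."""
    name: str
    code_hash: str
    data_hash: str
    model_hash: str

    @property
    def version_id(self) -> str:
        # The ID changes whenever any one of the three artifacts changes.
        payload = json.dumps(asdict(self), sort_keys=True).encode()
        return fingerprint(payload)


def register(name: str, code: bytes, data: bytes, model: bytes) -> ModelVersion:
    """Build a version record from the raw bytes of each artifact."""
    return ModelVersion(name, fingerprint(code), fingerprint(data), fingerprint(model))


# Retraining on new data yields a new version ID even though the code is unchanged.
v1 = register("churn", b"train.py contents", b"data snapshot 1", b"weights 1")
v2 = register("churn", b"train.py contents", b"data snapshot 2", b"weights 2")
print(v1.version_id, v2.version_id, v1.code_hash == v2.code_hash)
```

Hashing content rather than assigning sequence numbers means two teams that build from identical artifacts arrive at the same ID; a real version policy would likely pair this with human-readable numbering.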

- Model Performance Metrics

The model inputs, outputs and decisions, and interpretability metrics need to be monitored, along with model coefficients. Various tools can examine these metrics. For instance, ELI5 is a Python package that helps debug machine learning classifiers and explains their predictions. TensorBoard is a visualization tool used to track and examine both training and real-time model performance. Explanations produced with Local Interpretable Model-Agnostic Explanations (LIME) also need to be monitored and reviewed to check whether model fairness has been maintained or compromised. Enterprises must also measure model runtime success rates to assess whether AI model predictions meet the acceptable accuracy levels. Any deviation or performance below the benchmark must be followed by a re-evaluation of the model data (schema), the AI model (algorithms and training), and the code (business requirements and model configurations).
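The runtime check described above can be sketched as a rolling accuracy monitor that flags a model for re-evaluation when it falls below a benchmark. This is a minimal illustration, not a production monitor: the `AccuracyMonitor` class, the window size, and the 0.8 benchmark are all assumptions made for the example.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Track accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, benchmark: float, window: int = 100):
        self.benchmark = benchmark
        self.outcomes = deque(maxlen=window)  # True for each correct prediction

    def record(self, predicted, actual) -> None:
        """Store whether one prediction matched the observed outcome."""
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> Optional[float]:
        """Return rolling accuracy, or None before any outcome is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        # Only judge the model once a full window of outcomes is available.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.benchmark


monitor = AccuracyMonitor(benchmark=0.8, window=5)
for predicted, actual in [(1, 1), (0, 1), (1, 1), (0, 0), (1, 0)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_review())
```

Waiting for a full window avoids raising alarms on the first few predictions; the trade-off is that a sudden collapse is detected only after the window fills.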

- Model Interpretability and Explainability

As AI models increasingly influence decision-making, there is a need to provide evidence that their output can be trusted. From a compliance standpoint, companies may frequently be held accountable for inaccurate, biased, or simply unfounded AI results. In domains like healthcare, transparency and accountability are crucial, and that’s why AI models need to offer interpretability and explainability. Interpretability is the ability to predict the likely result of changes in input or algorithmic parameters, while explainability is the ability to understand all AI outputs. Augmenting algorithmic generalization and understanding the importance of features can improve both. Enterprises should also consider using methods such as LIME and DeepLIFT to explain model predictions to users.
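To illustrate what "understanding the importance of features" can look like in practice, the sketch below uses permutation importance: shuffle one feature at a time and measure how much accuracy drops. Note that this is a simpler model-agnostic technique swapped in for illustration; it is not LIME or DeepLIFT, and the function names and toy model are assumptions.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]


def accuracy(predict: Callable[[Row], int], rows: List[Row], labels: List[int]) -> float:
    """Fraction of rows for which the model's prediction matches the label."""
    hits = sum(1 for row, label in zip(rows, labels) if predict(row) == label)
    return hits / len(rows)


def permutation_importance(
    predict: Callable[[Row], int], rows: List[Row], labels: List[int], seed: int = 0
) -> List[float]:
    """Return, per feature, the accuracy lost when that feature is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        shuffled = [row[col] for row in rows]
        rng.shuffle(shuffled)
        # Rebuild each row with only this one column replaced.
        permuted = [
            list(row[:col]) + [value] + list(row[col + 1:])
            for row, value in zip(rows, shuffled)
        ]
        importances.append(baseline - accuracy(predict, permuted, labels))
    return importances


def toy_model(row: Row) -> int:
    """Hypothetical model that looks only at the first feature."""
    return int(row[0] > 0.5)


rows = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
labels = [toy_model(row) for row in rows]
print(permutation_importance(toy_model, rows, labels))
```

Because the toy model ignores its second feature, shuffling that column cannot change any prediction, so its importance is exactly zero; a feature the model relies on will typically show a positive drop.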

- Data Governance – Data Lineage and Provenance

Data governance and compliance are the latest and arguably the most significantⅲ addition to AI solution maintenance. AI solutions today must offer complete traceability of data sources, training data, and the model used. The records should provide a comprehensive view of the data, all the way from source to model to output.
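Traceability of this kind amounts to being able to walk backwards from any output to everything it was derived from. The sketch below shows the idea with an in-memory lineage map; the artifact names and the `trace_sources` helper are hypothetical, and a real system would hold these records in a metadata store.

```python
from collections import deque
from typing import Dict, List

# Hypothetical lineage records: each artifact lists what it was derived from.
LINEAGE: Dict[str, List[str]] = {
    "prediction_batch": ["churn_model"],
    "churn_model": ["training_set"],
    "training_set": ["crm_export", "billing_export"],
    "crm_export": [],
    "billing_export": [],
}


def trace_sources(artifact: str, lineage: Dict[str, List[str]]) -> List[str]:
    """Return every upstream artifact, nearest first (breadth-first walk)."""
    seen: List[str] = []
    queue = deque(lineage.get(artifact, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # an artifact may be reachable by more than one path
        seen.append(current)
        queue.extend(lineage.get(current, []))
    return seen


print(trace_sources("prediction_batch", LINEAGE))
# ['churn_model', 'training_set', 'crm_export', 'billing_export']
```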

- AI Governance Policy

Enterprises must develop an organizational AI model governance policy and an overarching global AI governance framework. The policy must cover the ethical use of AI and have the proper controls for AI implementation and usage.

- Model Services

- API Interfaces for Models

Many AI solution providers now offer API access to their ML solutions for third-party integrations. This is no different from providing REST APIs for other applications. Many ML solutions can be developed, published, and consumed as the business need arises, and enterprises can consider enabling free or paid access to these solutions.
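As a rough illustration of exposing a model over REST, the sketch below wraps a placeholder model in a small HTTP handler using only the Python standard library. Everything specific here is an assumption made for the example: the `/predict` path, the `tenure_months` field, and the rule inside `predict`, which stands in for a trained model. Input validation beyond JSON parsing is omitted.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def predict(features: dict) -> dict:
    """Placeholder rule standing in for a trained model (hypothetical)."""
    score = 0.1 * features.get("tenure_months", 0)
    return {"churn_risk": "low" if score >= 1.0 else "high"}


def handle_request(body: bytes) -> Tuple[int, dict]:
    """Turn a raw request body into an HTTP status and a JSON-ready payload."""
    try:
        features = json.loads(body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "request body must be valid JSON"}
    if not isinstance(features, dict):
        return 400, {"error": "request body must be a JSON object"}
    return 200, predict(features)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_request(self.rfile.read(length))
        data = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start the server; call this explicitly to run the sketch."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping `handle_request` separate from the HTTP plumbing means the prediction logic can be tested without starting a server, and the same function could sit behind a different web framework.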

- Model Containers

Another way of sharing ML models is to package them as Docker containers. Using predetermined infrastructure and ML packages as a base Docker image, enterprises can build a model on top and share it with clients as a Docker image. Model containers are another way to productionize AI models, and automating their deployment should be considered to save time and effort while eliminating inaccuracies.

- Training Data Provenance

It is essential to understand the nature of the data deployed for ML modeling, whether it’s primary or secondary, raw or processed, and structured or unstructured. Data provenance documents the inputs, entities, systems, and processes that influence the data of interest, providing a historical record of the data and its origins, which is crucial to ensure success in AI model management.

- Model Marketplace

- Data Set Ontologies

As all AI models are purely data-driven, having domain-specific ontologies that solve business problems is critical. Ontologies are schemas for datasets and help enterprises use AI solutions with preset published datasets. For better-maintained ontologies, enterprises should look to version them correctly and publish them on marketplaces.

- Model Metadata Management

Metadata is the ‘who, what, where, when, why, and how’ of data. Existing metadata management systems can be applied to AI model metadata management.

- Schema for API Inputs

The schema for exposed or published REST APIs is essential to understanding the structure of the data. Many formats support schemas for APIs, and enterprises should choose one that aligns with their needs, tools, and frameworks.
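To make the role of an input schema concrete, the sketch below checks a request payload against a declared set of fields and types. It deliberately uses a plain Python dictionary rather than any published schema format, and the field names and the `validate` helper are hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical input schema: field name -> accepted Python types.
INPUT_SCHEMA: Dict[str, Tuple[type, ...]] = {
    "customer_id": (str,),
    "tenure_months": (int,),
    "monthly_spend": (int, float),
}


def validate(payload: Dict[str, Any], schema: Dict[str, Tuple[type, ...]]) -> List[str]:
    """Return a list of problems; an empty list means the payload conforms."""
    errors = []
    for field, accepted in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
        elif not isinstance(payload[field], accepted):
            errors.append(f"{field}: wrong type {type(payload[field]).__name__}")
    for field in payload:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return errors


print(validate({"customer_id": "c-1", "tenure_months": 12, "monthly_spend": 49.5}, INPUT_SCHEMA))
# []
print(validate({"customer_id": 7, "extra": 1}, INPUT_SCHEMA))
# ['customer_id: wrong type int', 'missing field: tenure_months',
#  'missing field: monthly_spend', 'unexpected field: extra']
```

Returning every problem at once, rather than stopping at the first, gives API consumers a complete picture of what to fix in a single round trip.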

Maintaining Impact – The RoI of Better AI Model Operations

The success of AI implementations is essential to scaling an enterprise in the current environment, reducing operating expenses, and generating top-line spikes over 20%.ⅳ Better maintenance can help AI initiatives enjoy greater leadership trust and organization support, ensuring long-term value generation and growth. With the industry moving on from its experimentation phase to effectiveness, technology leaders and solution partners capable of extracting tangible results from AI implementations will set the foundation for exponential scale as the proliferation of AI continues.

References:

- https://www.forbes.com/sites/randybean/2021/01/03/decade-of-investment-in-big-data-and-ai-yield-mixed-results/?sh=1bf9363c409e

- https://www.modelop.com/blog/are-your-model-governance-practices-ai-ready/

- https://www.oreilly.com/radar/what-are-model-governance-and-model-operations/

- https://www.mckinsey.com/~/media/McKinsey/Business%20Functions/Risk/Our%20Insights/The%20evolution%20of%20model%20risk%20management/The-evolution-of-model-risk-management.pdf