Make your data work for you

A technical article on data harmonization

December 2019

In a world driven by technology, data has fast become the planet’s most valuable resource. For enterprises, the ability to harness data is as valuable as it is complicated. With digitization no longer the exception but the norm, enterprises are becoming data-driven and, more importantly, data-dependent. At the heart of digital transformation, data can power businesses into the future, unlocking business potential at every stage of the value chain. On one hand, high-quality data supports decision-making with actionable insights, and on the other, it offers businesses a competitive advantage by enabling transformative technologies like predictive analytics. If the value is so evident, why aren’t enterprises able to leverage data at scale? The answer lies in the challenges.

UNPACKING THE DATA CONUNDRUM

The struggle is real. A simplistic view is that because of the large volumes of internal and external data that enterprises generate, it should be easy for them to extract insights to drive intelligent operations. There is one caveat. Size does not mean structure. To build a holistic, consistent, and insightful view of the organization, enterprises must first organize their data. Several obstacles hinder this process:

By Sagar Sarma

AVP – Senior Director,

Head – Product Development,

Business Applications,

EdgeVerve Systems Ltd. (An Infosys Company)

Data generation in siloed systems

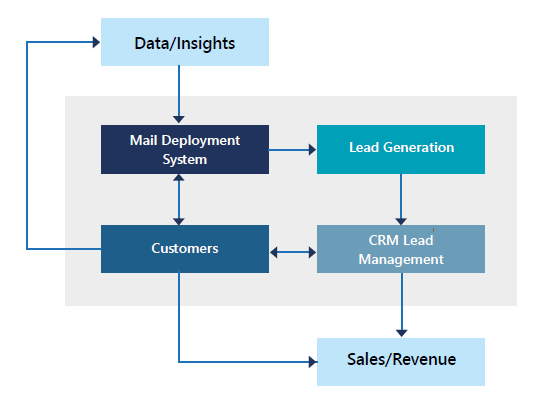

Organizations generate data from siloed systems. For example, marketing automation tools like Oracle Eloqua or CRM systems like Microsoft Dynamics have different representations of data for the same individual. For any campaign analysis, you would have to connect these systems to generate an accurate view for the same prospect or customer.

In this case, the absence of standardized fields becomes a constraint. Banks are another example.

Fig.1 A representation of siloed network of marketing systems

By storing multiple addresses of the same customer for different services, banks struggle to connect the data for the same customer and consequently lose out on business opportunities.

Disparity of external sources

The inconsistency issue is exacerbated when enterprises connect organization-generated data to external sources. In fact, this problem is harder to navigate than the siloed data generation within enterprises.

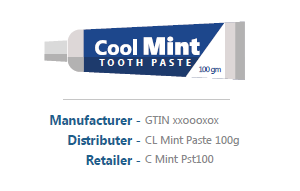

Fig.2 A representation of different notations across the value chain for the same product

For instance, in trade-related data, in spite of having a GTIN (Global Trade Item Number), the same product is represented by different notations across manufacturers, distributors, and retailers. The resulting complexity leads to further inaccuracies and even more chaos.

The inefficiency of traditional methods

While consistently promoting a narrative of innovation, a majority of enterprises continue to use human intelligence as their primary recourse to solve data standardization problems. Businesses invest large sums of money in requirements for combined data and reports, which mostly translate into a set of rules for an extensive manual review. Depending on the data quality, a few scripts may be written to connect data fields across systems, but the rest of the process is mostly inefficient. Connecting semi-structured or unstructured data poses even more significant problems:

- By not conducting a systemic study to find ways to unify data across all levels and systems, enterprises miss out on data analysis opportunities

- Manual data processing comes with inherent bias and human inaccuracy, and this results in high resource arbitrage and error rates

ORDER THROUGH INTELLIGENCE

From these challenges, it is evident why enterprises struggle to take advantage of their rich and nuanced information sources. Data is available but simply not ready for use. The solution – data harmonization. Data harmonization brings consistency to enterprise data management by contextualizing business data for each enterprise. By leveraging automation and machine learning techniques, this process makes data insight-ready through a clean, efficient, and effective mechanism.

At EdgeVerve, we understand that the best enterprises need to be supported by the best technology. That’s why we built EdgeVerve TradeEdge Data Harmony – a cloud-based solution that utilizes best-in-class machine learning and AI techniques to harmonize multiple external data sources in parallel.

A LOOK UNDER THE HOOD

TradeEdge Data Harmony is a set of progressively learning algorithms that build and operate on taxonomy-based data dictionaries, using the C-Score to eliminate the need for rule-based processes. Data dictionaries are created using one of the data sources as reference data.

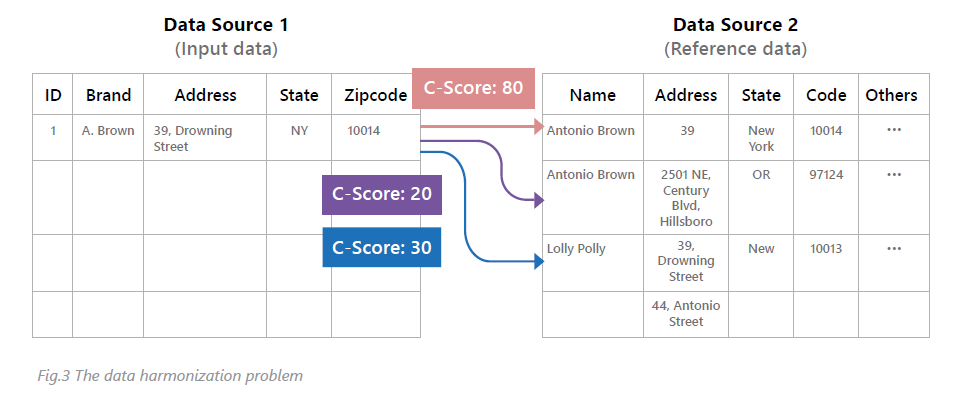

In the example in Figure 3, Data Source 2 has been considered reference data and the dictionaries will be built using the fields of this source.

The customer-provided taxonomy is a semi-structured set of words consisting of nouns and alphanumeric sets. Because of this variation, our harmonization solution does not rely on linguistic grammar or apply conventional NLP techniques. Additionally, we have found techniques like stop word elimination (e.g. ‘the’, ‘in’ and others) to be ineffective and have instead used stemming and lemmatization to normalize inflected word forms.

The problem of data harmonization is the mapping of one row of input (Data Source 1) to one or more rows of reference data (Data Source 2). We address this issue by assigning a C-Score to fields where there is a strong correlation. The higher the C-Score, the stronger the relation. This approach alleviates the need to state rules to express which column of input data needs to be mapped to a column of reference since the system automatically determines data to match and augments the C-Score.
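The article does not publish the C-Score formula, so the following is only a minimal sketch of the mapping idea using a plain token-overlap score as a stand-in. The function names and the sample rows are assumptions made for illustration; they are not part of TradeEdge Data Harmony.

```python
import re

def tokenize(row):
    """Flatten every cell of a row into one set of lowercase tokens."""
    tokens = set()
    for cell in row:
        tokens.update(re.findall(r"[a-z0-9]+", str(cell).lower()))
    return tokens

def overlap_score(input_row, reference_row):
    """Stand-in score: fraction of input tokens found anywhere in the reference row."""
    input_tokens = tokenize(input_row)
    if not input_tokens:
        return 0.0
    return len(input_tokens & tokenize(reference_row)) / len(input_tokens)

def best_matches(input_row, reference_rows):
    """Return the top score and the indices of all reference rows that reach it."""
    scores = [overlap_score(input_row, ref) for ref in reference_rows]
    top = max(scores, default=0.0)
    return top, [i for i, s in enumerate(scores) if s == top and top > 0]

# Hypothetical reference data (Data Source 2) and one input row (Data Source 1).
reference = [
    ["Antonio Brown", "12 Downing Street", "London"],
    ["Maria Lopez", "4 Market Street", "San Francisco"],
]
score, rows = best_matches(["A. Brown", "Downing St, London"], reference)
print(score, rows)
```

No column-to-column rule is stated anywhere: every input token is compared against every token of a reference row, and only the first reference row shares tokens with the input here. When more than one index is returned, the match is ambiguous.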

LEARNING FUNCTIONS AND IMPROVEMENT OVER TIME

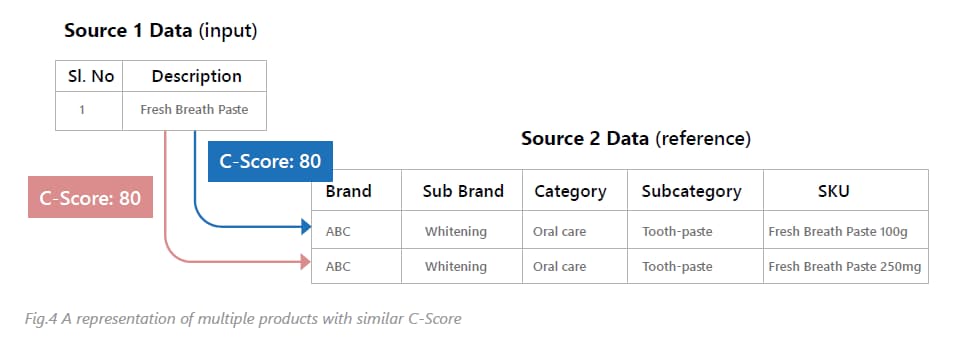

The address mapping problem statement described above is simple, in the sense that a person’s name and address are unique and it is straightforward to find one row in the reference data where the C-Score is the highest. While this is an example of intelligent automation where the problem is addressed without human intervention, there are other cases such as trade data harmonization (see Figure 4). Here, there are hundreds of similar types of products that manufacturers build and incoming data may produce the same C-Score for many rows in the reference data. In this system, human guidance is applied followed by feedback learning to improve results significantly.

PREPARING A DATA DICTIONARY

The taxonomy is constructed automatically from the reference data by creating a set of dictionaries. This is the first step of data harmonization. Below is a list of the dictionaries created:

- Language dictionary

- Spelling dictionary

- Abbreviation dictionary

- Split Merge words dictionary

- Correlated words dictionary

- n-grams

In summary, these dictionaries capture information about:

Importance of words: Using TF-IDF algorithms as the base, bag-of-words constructions can be used to create important word and column vectors.

Word contexts: “Antonio” is related to both “Brown” and “Street”. This relationship needs to be expressed in the form of a graph to traverse quickly.

Error corrections: The word “Downing” may be written as “Downin”.

Word sequencing using n-grams: “San Francisco”, a 2-gram, can be chunked together as one word.
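To make the "importance of words" idea concrete, here is a minimal sketch of a textbook TF-IDF weighting over reference rows, with each row treated as one document. It is an illustration under that assumption, not the product's algorithm; the function name and the sample rows are made up for the example.

```python
import math
from collections import Counter

def tf_idf(rows):
    """Return one {token: weight} dict per row, treating each row as a document."""
    docs = [row.lower().split() for row in rows]
    n_docs = len(docs)
    # Document frequency: the number of rows in which each token appears.
    df = Counter(token for doc in docs for token in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            token: (count / len(doc)) * math.log(n_docs / df[token])
            for token, count in counts.items()
        })
    return weights

# Hypothetical reference rows.
rows = [
    "antonio brown downing street",
    "maria lopez market street",
    "john smith san francisco",
]
weights = tf_idf(rows)
print(weights[0])
```

In this toy data, "street" appears in two of the three rows and so receives a lower weight than "downing", which appears in only one. That is the sense in which rare tokens carry more importance when rows are matched.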

Complex Cases of Dictionary Creation

One of the critical capabilities that we have developed is an algorithm with the ability to identify abbreviations. Say there are two words – “Nutritious” and “NTRS” – that are found in the same row of the reference input. The system then identifies “NTRS” as an abbreviation of “Nutritious” and stores this relationship for later use, helping the algorithm to improve accuracy when future input data only includes the abbreviation.
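The article does not describe how the abbreviation algorithm works. One simple heuristic that reproduces the “Nutritious” / “NTRS” example is an ordered-subsequence test, sketched below. The heuristic, the function names, and the extra tokens in the sample row are all assumptions for illustration.

```python
def is_abbreviation(short, full):
    """Heuristic: `short` is shorter than `full`, starts with the same letter,
    and its letters occur in `full` in the same order (a subsequence)."""
    short, full = short.lower(), full.lower()
    if not short or len(short) >= len(full) or short[0] != full[0]:
        return False
    remaining = iter(full)
    # `ch in remaining` advances the iterator, so letter order is enforced.
    return all(ch in remaining for ch in short)

def find_abbreviations(row_tokens):
    """Pair up tokens from the same reference row that pass the heuristic."""
    pairs = {}
    for short in row_tokens:
        for full in row_tokens:
            if is_abbreviation(short, full):
                pairs[short] = full
    return pairs

# Hypothetical reference row containing both forms.
abbreviation_dictionary = find_abbreviations(["Nutritious", "NTRS", "Bar", "200g"])
print(abbreviation_dictionary)
```

A heuristic this loose will produce false positives on real data (any shorter word whose letters happen to appear in order), so a production system would need additional evidence, such as how often the two tokens co-occur across rows.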

Input Data Preparation

Our method harmonizes the entire input data preparation process. We do this in a few ways.

Automation Featurization: The input data requires multi-level processing to be converted to a form that can be mapped to the reference data. The first step is to combine all the columns in an input row into a set of input tokens. These tokens are then incrementally processed using the dictionaries to derive a final token list. Examples of such processing include:

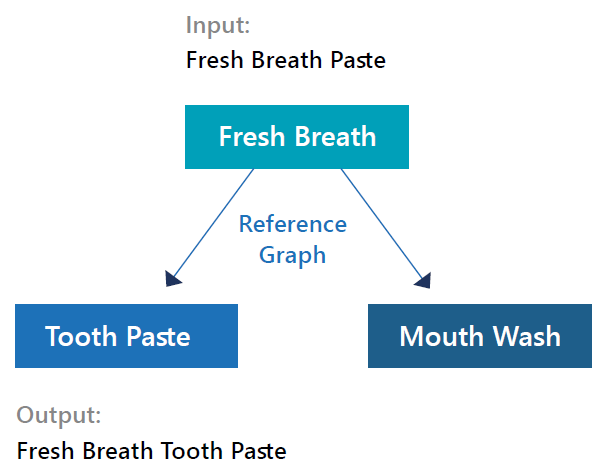

Take a look at the following example. “Fresh Breath”, in this case, could mean either of the two products below.

The challenges with trade data are many. Each channel partner has its own way of identifying nomenclature and structuring the data. Mapping a competitor’s data to one’s own data set requires specialized expertise and is far from trivial. Data harmonization at this stage becomes critical.

Context Identification: Context identification means identifying a relationship between the input features and the reference rows. The main challenge here is that the column heads in Source 1 (input) and Source 2 (reference) may not have any similarity, so the correspondence between columns isn’t available. Without it, a system would have to match every column of input data to every column in the reference data across all rows, which would require an enormous amount of computing power. We overcome this need by using the data dictionaries prepared in the previous step to quickly identify a relation with a cell in the reference data, speeding up the processing of input data. If the input is “Fresh Breath paste”, then the dictionaries will be crawled as shown in the above graph to identify the relation.
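The speed-up described here can be illustrated with a plain inverted index: each token is mapped once to the reference cells that contain it, so an input token is resolved by a lookup rather than by scanning every column of every row. This is a simplified stand-in for the dictionary graph, and the product rows below are invented for the example.

```python
from collections import defaultdict

def build_index(reference_rows):
    """Map each token to the (row, column) cells of the reference data that contain it."""
    index = defaultdict(set)
    for r, row in enumerate(reference_rows):
        for c, cell in enumerate(row):
            for token in str(cell).lower().split():
                index[token].add((r, c))
    return index

def candidate_rows(input_text, index):
    """Count, per reference row, how many distinct input tokens hit a cell of that row."""
    hits = defaultdict(int)
    for token in set(input_text.lower().split()):
        for r in {row for row, _ in index.get(token, ())}:
            hits[r] += 1
    return dict(hits)

# Hypothetical reference data: two products share the name "Fresh Breath".
reference = [
    ["Fresh Breath", "toothpaste", "100 g"],
    ["Fresh Breath", "mouthwash", "250 ml"],
    ["Bright Smile", "toothpaste", "100 g"],
]
index = build_index(reference)
candidates = candidate_rows("Fresh Breath paste", index)
print(candidates)
```

Column headers are never consulted. The exact-token lookup finds two equally good candidate rows and fails to link "paste" to "toothpaste", which is the kind of gap the split/merge and correlated-words dictionaries are meant to close.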

Mapper Logic

The mapper function identifies the number of tokens in an input row that match each of the reference rows. The C-Score computation is based on:

First Responder and Feedback Learning

For a complex data set, when multiple reference rows receive the same C-Score for one input row, the first responder system brings in a human to choose the correct match. The system then learns from that choice by automatically adjusting the importance of the data dictionaries, so that similar cases are resolved more accurately in future.
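The update rule used for feedback learning is not given in the article. The sketch below shows one plausible shape for it, assuming each candidate row carries a count of matches contributed by each dictionary and that the score is a weighted sum of those counts. The weighting scheme, the learning rate, and all names are assumptions.

```python
def score(evidence, weights):
    """Weighted sum of per-dictionary match counts for one candidate row."""
    return sum(weights[name] * count for name, count in evidence.items())

def tied_candidates(candidates, weights):
    """Return the ids of the candidate rows that share the top score."""
    scores = {row_id: score(ev, weights) for row_id, ev in candidates.items()}
    top = max(scores.values())
    return [row_id for row_id, s in scores.items() if s == top]

def apply_feedback(candidates, chosen, weights, rate=0.1):
    """Shift weight toward dictionaries that favoured the human-chosen row."""
    updated = dict(weights)
    for row_id, evidence in candidates.items():
        if row_id == chosen:
            continue
        for name in weights:
            diff = candidates[chosen].get(name, 0) - evidence.get(name, 0)
            updated[name] = max(0.0, updated[name] + rate * diff)
    return updated

# Hypothetical evidence: matches contributed by each dictionary per candidate row.
weights = {"spelling": 1.0, "abbreviation": 1.0}
candidates = {
    "row_a": {"spelling": 2, "abbreviation": 0},
    "row_b": {"spelling": 1, "abbreviation": 1},
}
before = tied_candidates(candidates, weights)    # both rows tie
weights = apply_feedback(candidates, "row_b", weights)
after = tied_candidates(candidates, weights)     # the tie is broken
print(before, after)
```

After a reviewer picks `row_b`, the abbreviation dictionary gains weight and the spelling dictionary loses some, so the same evidence no longer produces a tie.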

In a highly competitive business environment, enterprises can use every advantage they can get. In recent times, much of the attention has been directed to digital transformation and emerging technologies such as machine learning, predictive analytics, and AI. However, without data harmonization, the effectiveness of each of these interventions is greatly reduced.

Through data harmonization, enterprise data can be more consistent, complete, and richer, providing a single source of truth that offers valuable business insights.

The resulting clarity allows enterprises to explore new techniques, make better decisions, and be more agile. TradeEdge Data Harmony’s ability to drive intuitive data harmonization through intelligent applications is specifically designed to ensure that your business doesn’t just survive, but thrives.
