Enterprises have now moved past the “let’s try it” stage with AI. The focus is shifting from proving what’s possible to making it work in day-to-day operations. The ask has moved from proof of concept to proof of reliability. Leaders are asking, “How do we make intelligent systems act responsibly, repeatedly, and at scale?”

Agentic AI has added a new dimension to this conversation. Agentic systems don’t just generate information; they take actions such as executing a transaction, issuing a refund, or detecting a shipping delay and notifying the people affected. But for that to work inside large, complex organizations, design matters. To deploy these agents at scale, enterprises must first build an architectural backbone that balances autonomy with accountability.

The real challenge isn’t access to a large language model anymore, but the discipline to structure, govern, and continuously operate agents that behave predictably inside complex environments.

Why Reliability Is a Design Problem

Every enterprise runs on a fabric of interconnected systems – ERP, CRM, procurement, compliance, analytics, and more. Each of these is optimized for its own purpose but rarely for how work flows between them. The space where handoffs between systems happen has historically relied on humans to interpret, check, decide, and act, and that reliance is a source of inefficiency. Agentic AI targets it by extending generative intelligence into this in-between space: connecting systems, filling gaps, and carrying work forward.

This is also where the cracks begin to show. Without design discipline, agents run into the same old problems – inconsistent data, uneven interfaces, and lack of accountability. The issue isn’t what the model can do; it’s how it fits into the enterprise fabric and context. Enterprises need a structured way to integrate, monitor, and evolve agentic behavior without losing control of outcomes.

The starting point is recognizing that the model itself is only infrastructure. The intelligence comes from the way the model is architected into the enterprise environment – how it interacts with systems, learns from feedback, and operates within guardrails. Successful organizations design this architecture upfront, not as an afterthought.

The Three Pillars of Enterprise Readiness

To make agentic systems dependable, organizations need to build readiness across three areas – technical, knowledge, and strategic.

- On the technical front, agents must be able to communicate with the systems where real work happens. Unfortunately, many of those systems were never built for AI interaction. So, companies have to build the right connectors, define access controls, and add audit trails. This is the engineering layer that turns a working demo into a trusted part of daily operations.

- Next comes knowledge readiness, because even the best model can’t perform well if it lacks context. It needs access to curated enterprise knowledge. Unfortunately, much of what organizations know isn’t stored neatly in databases; it sits in policy documents, workflows, or employees’ experience, scattered across the enterprise and often implicit. Capturing and organizing that knowledge helps agents make better decisions and stay aligned with business logic.

- The third pillar is strategic readiness. Enterprises need to decide upfront how people and agents will work together and where the autonomy boundaries lie: when can an agent act independently, when must it collaborate, and when must it defer to a human? In some scenarios, such as research or summarization, higher autonomy makes sense. In others, such as compliance actions, financial approvals, or external communication, a human-in-the-loop is non-negotiable. Without this clarity, enterprises end up either over-restricting agents or exposing themselves to unnecessary risk.
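The autonomy boundaries and the technical controls described above (access controls, audit trails) can be written down as explicit policy rather than left implicit in prompts. The following is a minimal Python sketch, not a product API: the task categories, the three tiers, and the names `POLICY`, `route`, and `AuditLog` are hypothetical, and mapping shipping-delay notices to the collaborate tier is an illustrative assumption.

```python
# Hypothetical sketch: autonomy tiers, access control, and an audit trail.
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List, Set


class Autonomy(Enum):
    AUTONOMOUS = "act independently"
    COLLABORATE = "act, then notify a human"
    DEFER = "require human approval"


# Hypothetical policy table. Research, summarization, compliance, financial and
# external-communication levels follow the examples in the text; the
# shipping-delay entry is an assumed example of the "collaborate" tier.
POLICY: Dict[str, Autonomy] = {
    "research": Autonomy.AUTONOMOUS,
    "summarization": Autonomy.AUTONOMOUS,
    "shipping_delay_notice": Autonomy.COLLABORATE,
    "compliance_action": Autonomy.DEFER,
    "financial_approval": Autonomy.DEFER,
    "external_communication": Autonomy.DEFER,
}


@dataclass
class AuditLog:
    """Append-only record of every routing decision (the audit trail)."""
    entries: List[dict] = field(default_factory=list)

    def record(self, agent: str, task: str, decision: str) -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "task": task,
            "decision": decision,
        })


def route(agent: str, task: str, allowed: Set[str], log: AuditLog) -> str:
    """Apply access control, then the autonomy boundary, and log the outcome."""
    if task not in allowed:
        # Access control: this agent has not been granted this task.
        decision = "denied: outside agent's access scope"
    else:
        # Unknown task categories default to the most restrictive tier.
        decision = POLICY.get(task, Autonomy.DEFER).value
    log.record(agent, task, decision)
    return decision


if __name__ == "__main__":
    log = AuditLog()
    scope = {"summarization", "financial_approval"}
    for task in ("summarization", "financial_approval", "external_communication"):
        print(task, "->", route("finance-agent", task, scope, log))
    print(len(log.entries), "audit entries")
```

Defaulting unknown tasks to the most restrictive tier means a new kind of work stays human-approved until someone deliberately widens the boundary.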

Designing for Change

AI models evolve faster than enterprise systems. A model that performs well today might be replaced within months. Designing for adaptability means treating change as a constant.

That’s why enterprises need environments where they can test and replace models without rebuilding everything. Good architecture makes this possible with built-in observability, version control, and evaluation tools. The goal is to make improvement routine, not disruptive. It’s a shift from project to platform thinking, where innovation is continuous, but control never slips.
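One way to make model replacement routine is to hide every model behind a stable interface and gate promotion on an evaluation set. The sketch below assumes a simple text-in, text-out interface; `ModelRegistry`, `evaluate`, `CannedModel`, and the pass-rate threshold are hypothetical names chosen for illustration, and `CannedModel` stands in for a real model client.

```python
# Hypothetical sketch: versioned model registry with an evaluation gate.
from typing import Callable, Dict, List, Optional, Protocol, Tuple


class Model(Protocol):
    """The only surface agents depend on; any provider can sit behind it."""

    def generate(self, prompt: str) -> str: ...


# An evaluation case pairs a prompt with a check on the model's output.
EvalCase = Tuple[str, Callable[[str], bool]]


def evaluate(model: Model, cases: List[EvalCase]) -> float:
    """Fraction of evaluation cases whose output passes its check."""
    if not cases:
        raise ValueError("an evaluation set is required")
    passed = sum(1 for prompt, check in cases if check(model.generate(prompt)))
    return passed / len(cases)


class ModelRegistry:
    """Versioned registry: agents call active(), never a concrete model."""

    def __init__(self) -> None:
        self._versions: Dict[str, Model] = {}
        self._active: Optional[str] = None
        self._history: List[str] = []

    def register(self, version: str, model: Model) -> None:
        self._versions[version] = model

    def active(self) -> Model:
        if self._active is None:
            raise RuntimeError("no model has been promoted yet")
        return self._versions[self._active]

    def promote(self, version: str, cases: List[EvalCase], threshold: float) -> bool:
        """Switch to `version` only if it meets the evaluation threshold."""
        score = evaluate(self._versions[version], cases)
        if score < threshold:
            return False
        if self._active is not None:
            self._history.append(self._active)
        self._active = version
        return True

    def rollback(self) -> str:
        """Return to the previously active version."""
        if not self._history:
            raise RuntimeError("nothing to roll back to")
        self._active = self._history.pop()
        return self._active


class CannedModel:
    """Stand-in for a real model client; answers from a fixed table."""

    def __init__(self, answers: Dict[str, str]) -> None:
        self.answers = answers

    def generate(self, prompt: str) -> str:
        return self.answers.get(prompt, "")
```

Because agents only ever call `active()`, swapping providers means registering and promoting a new version rather than editing agent code; a candidate that misses the threshold leaves the current version in place, and `rollback()` restores the previous one.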

From Experiments to Enterprise Systems

Agentic AI is following the same path enterprise software once did – from small, standalone tools to an integrated system of work. To reach that next stage with AI, enterprises need agents to move beyond pilots and become part of the operating model. That calls for orchestration across roles, data, and processes.

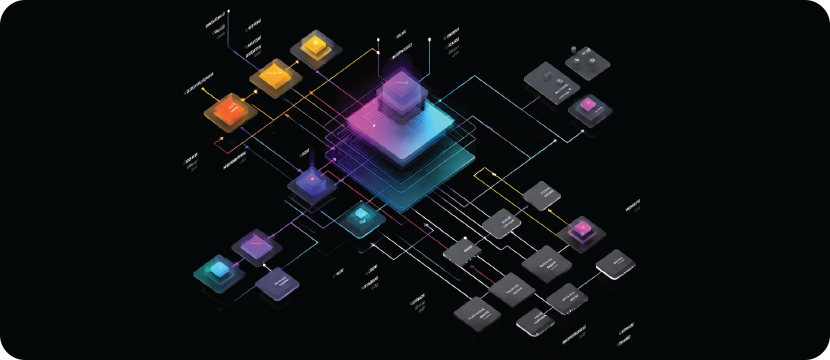

This is where Infosys Topaz and EdgeVerve AI Next work together. Topaz provides composable intelligence – reusable patterns, domain-specific models, and experience drawn from live deployments. EdgeVerve AI Next provides the unifying platform layer that connects these capabilities securely to enterprise systems, embedding governance, observability, and trust. Together, they give enterprises a predictable way to move AI from pilot to production with the same rigor as any core enterprise system.

When this design approach is applied, results follow. In Infosys’ own finance operations, specialized agents addressed pain points such as delayed invoice dispatch, fragmented portals, dynamic payment terms, and manual interventions. As a result, the organization has seen a more than 3% increase in monthly cash flow, a 50% productivity improvement, a 10x consolidation from account-level to business-function support, 90% faster onboarding, and a $32 million increase in cash flow within the first year.

The same approach is also being applied in procurement, logistics, and service operations, where agents are embedded into regular workflows instead of being tested in isolation.

Preventing Failure Before It Starts

Analysts increasingly warn that a majority of generative and agentic AI projects may fail before reaching production. The reasons are predictable: absence of guardrails, overreliance on a single model, or lack of alignment between technology and process ownership. The antidote lies in structure.

The way to address this is through a platformized lifecycle – from build to deployment to ongoing management. This approach gives enterprises a controlled environment to test new agents, validate behavior, and iterate without risk to core operations. As knowledge from one deployment feeds into the next, it shortens deployment cycles and improves reliability over time. The result is not just functional agents but an organizational capability to industrialize intelligence.
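A platformized lifecycle can be approximated as stage gates that an agent release must pass, with behavior validated in a sandbox that records intended actions instead of executing them. This is a minimal sketch under those assumptions: the `Stage`, `Sandbox`, `Scenario`, and `Release` names, the invoice-dispatch agent, and its scenarios are hypothetical, and a real platform would swap the sandbox for governed connectors in production.

```python
# Hypothetical sketch: stage-gated agent releases validated in a sandbox.
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Stage(Enum):
    BUILD = 1
    VALIDATED = 2
    PRODUCTION = 3


@dataclass
class Sandbox:
    """Captures the actions an agent attempts instead of executing them."""
    actions: List[str] = field(default_factory=list)

    def execute(self, action: str) -> None:
        self.actions.append(action)


# An agent reacts to a business event by requesting actions from an executor.
Agent = Callable[[dict, Sandbox], None]


@dataclass
class Scenario:
    name: str
    event: dict
    expected_actions: List[str]


def validate(agent: Agent, scenarios: List[Scenario]) -> List[str]:
    """Run every scenario in a fresh sandbox; return the names that fail."""
    failures = []
    for scenario in scenarios:
        box = Sandbox()
        try:
            agent(scenario.event, box)
        except Exception:
            failures.append(scenario.name)
            continue
        if box.actions != scenario.expected_actions:
            failures.append(scenario.name)
    return failures


@dataclass
class Release:
    name: str
    agent: Agent
    stage: Stage = Stage.BUILD

    def promote(self, scenarios: List[Scenario]) -> List[str]:
        """Advance one stage only if every scenario passes; else stay put."""
        failures = validate(self.agent, scenarios)
        if not failures and self.stage is not Stage.PRODUCTION:
            self.stage = Stage(self.stage.value + 1)
        return failures


# Shared scenario library: cases written for one deployment are reused by the next.
SCENARIO_LIBRARY: List[Scenario] = [
    Scenario("ready invoice is dispatched", {"id": "INV-1", "invoice_ready": True},
             ["dispatch_invoice:INV-1"]),
    Scenario("unready invoice is held", {"id": "INV-2", "invoice_ready": False}, []),
]


def invoice_agent(event: dict, box: Sandbox) -> None:
    """Hypothetical invoice-dispatch agent."""
    if event.get("invoice_ready"):
        box.execute(f"dispatch_invoice:{event['id']}")
```

The shared `SCENARIO_LIBRARY` is one way to let knowledge carry forward: scenarios written for one agent become regression checks for its next release or for the next agent.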

A New Design Discipline for the AI Era

The evolution of enterprise AI is not only about smarter models; it’s about smarter engineering. The same principles that guided enterprise software – version control, clear interfaces, error handling, auditability – now apply to intelligent systems that can reason and act.

As the adoption curve steepens, enterprises that invest in this design discipline will pull ahead. They will move faster not because they take more risk, but because they manage it better. They will treat AI not as a series of pilots, but as an evolving system of work.

Disclaimer: Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the respective institutions or funding agencies.